A History of the Internet

From four computers to four billion

Jul 15, 2024

The internet is a ubiquitous part of most people’s lives, and while it has been around in some form for over 50 years, the structure of the internet and its place in society have made some very dramatic shifts during that time. If you’ve ever wondered why the internet is the way it is or where it might be heading, then keep reading as we go through the history of the internet from its inception to the present day.

On this page:

Computers before the Internet | Early networks | Internetworking | The World Wide Web | The Dot-Com Bubble | The internet takes over | Web 2.0 and the Cloud | The internet today

Computers before the internet

During the first half of the 20th century, electronic computers began to replace earlier mechanical computers. These huge machines, like the ENIAC (Electronic Numerical Integrator and Computer) and the NORC (Naval Ordnance Research Calculator), were most often used for ballistic calculations, although they could also be used for other complicated calculations in fields like statistics, astronomy, and codebreaking.

During the Cold War, these computers got even bigger and more powerful, culminating with the Semi-Automatic Ground Environment (SAGE). This continent-spanning system had 24 of the largest computers ever built, each one weighing 275 tons and taking up half an acre of floor space. The purpose of SAGE was to defend against a possible Soviet air attack by tracking incoming aircraft, predicting interception points, and calculating missile trajectories. The radar stations, tracking consoles, teleprinters, and other equipment were connected by a huge network of copper telephone wires.

The idea of a single computer being operated by multiple consoles led to the shift from huge single-purpose computers to general-purpose mainframe computers. These computers required operating systems that could multitask and handle different inputs coming from different users in real time.

In 1960, Dr. JCR Licklider, an MIT professor working on the SAGE project, published “Man-Computer Symbiosis,” a paper in which he argued that computers could be used not just for calculating the solutions to problems that humans had already figured out, but for helping people come up with the actual solutions to problems they were working on. In this paper, he proposed the idea of having huge libraries of information that could be accessed by individual users across broadband communication lines, as well as concepts like natural language processing, real-time collaborative whiteboarding programs, and speech recognition that have only become widely available in the last decade or so.

By the mid-1960s, all the ingredients needed for the internet were starting to come together. Computer hardware and software were becoming more versatile, the concept of the computer’s role in society was changing, and there was a strong political will to invest resources into both research and infrastructure due to Cold War tensions. The only thing left was to build the actual networks.

Early networks

Wired communication networks in the US began with the telegraph and the telephone in the 1800s. These systems worked by creating a single, dedicated connection between the devices on either end. Relay stations, switchboard operators, and later automated telephone exchanges allowed people to connect to different destinations, but every call consisted of a direct, physical connection between the two endpoints.

One weakness of this design is that cutting the line at any point breaks the communication—a problem the US military had been dealing with since the Civil War. The huge networks of SAGE and other communication systems still relied on these direct connections to link their facilities together.

Distributed networks

In the early 1960s, Paul Baran, an engineer at the RAND Corporation, suggested a method to maintain reliable communication in a network made of unreliable components. With fears of nuclear war with the Soviet Union on the rise, Baran was concerned that even a single nuclear strike could split nationwide networks into multiple pieces that had no way of communicating with each other.

Baran proposed designing distributed networks that could easily route messages around damaged nodes. This meant that even in the event of massive damage to communication infrastructure following a nuclear attack, military coordination, economic activity, and civic participation would still go on.
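To make the idea of routing around damage concrete, here is a minimal sketch in Python. It is not Baran's actual algorithm; it simply runs a breadth-first search over a made-up five-node mesh (the `LINKS` table and node names are invented for illustration) and skips any node marked as damaged.

```python
from collections import deque

def find_route(links, start, goal, damaged=frozenset()):
    """Breadth-first search for a path that avoids damaged nodes."""
    if start in damaged or goal in damaged:
        return None
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in links.get(node, ()):
            if neighbor not in visited and neighbor not in damaged:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no surviving route

# Hypothetical mesh: every node has at least two neighbors.
LINKS = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D", "E"],
    "D": ["B", "C", "E"],
    "E": ["C", "D"],
}

print(find_route(LINKS, "A", "E"))                  # ['A', 'C', 'E']
print(find_route(LINKS, "A", "E", damaged={"C"}))   # ['A', 'B', 'D', 'E']
```

Because each node has more than one neighbor, losing node C only makes the route longer. In a centralized network where everything passes through one hub, losing that hub would cut A off from E entirely.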

In order for messages to navigate a distributed network and still arrive at their intended destination, message blocks would need to be standardized and given what Baran called “housekeeping information” to keep track of things like routing information and error detection. This approach would come to be known as “packet switching,” which is still how information travels across the internet.
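A rough sketch of the idea in Python might look like the following. The field names (`seq`, `total`, `checksum`) and the 8-byte block size are assumptions chosen for illustration, not the format Baran or any real protocol used. Each block carries enough housekeeping information to be checked and put back in order, even if the blocks arrive shuffled.

```python
import random
import zlib

def make_packets(message: bytes, size: int = 8):
    """Split a message into blocks, each with its own housekeeping fields."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [
        {"seq": n, "total": len(chunks),
         "checksum": zlib.crc32(chunk), "payload": chunk}
        for n, chunk in enumerate(chunks)
    ]

def reassemble(packets):
    """Verify every packet, then join the payloads in sequence order."""
    for p in packets:
        if zlib.crc32(p["payload"]) != p["checksum"]:
            raise ValueError(f"packet {p['seq']} is corrupted")
    ordered = sorted(packets, key=lambda p: p["seq"])
    if not ordered or len(ordered) != ordered[0]["total"]:
        raise ValueError("missing packets")
    return b"".join(p["payload"] for p in ordered)

message = b"Packets can take different routes and arrive in any order."
packets = make_packets(message)
random.shuffle(packets)          # simulate out-of-order arrival
restored = reassemble(packets)
print(restored == message)       # True
```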

Perhaps more important than its ability to survive a nuclear war, this distributed approach to communication meant that many simultaneous users with different equipment, different connections, and different needs could all use the same broadband network.

ARPANET

In 1958, the US Department of Defense created the Advanced Research Projects Agency (ARPA) as a response to the panic over the Soviet Union’s launch of Sputnik. Secretary of Defense Neil McElroy hoped that putting ARPA in charge of military research and development would stop the other branches of the military from competing over space and missile defense programs (the Army and Navy each launched their own satellite that year). Although most space projects would be given to NASA, which was established a few months later, ARPA established offices to study technologies like lasers, material science, and chemistry. It also established the Information Processing Techniques Office for studying computers.

This office actually came about not because of an ARPA initiative, but because the Air Force had somehow ended up with an extra computer, one of the massively expensive 275-ton computers from the SAGE program. Whether out of generosity or embarrassment, the Air Force gave the computer to ARPA, which then hired JCR Licklider as the director of the new program.

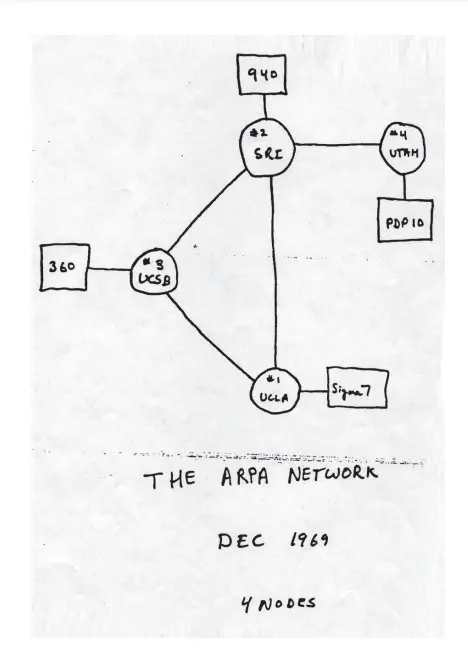

The first four nodes of ARPANET. Image provided by DARPA.

In 1966, researchers at ARPA began developing a distributed packet-switched network they dubbed ARPANET. The project was initiated by Bob Taylor, who had taken over the role of director of the Information Processing Techniques Office. Taylor wanted to realize Licklider’s vision of a vast network of computers that allowed access to vast amounts of information from anywhere in the world. He also hated having to get up and move to another terminal every time he wanted to talk to someone on a different computer.

When ARPANET went online in 1969, it had just four nodes—UCLA, Stanford Research Institute, UC Santa Barbara, and the University of Utah—each one having a single computer. Within a few years, the network grew to dozens and then hundreds of computers in universities, government offices, and research institutes.

Internetworking

By the early 1970s, there were several computer networks in operation around the world, including the Merit Network in Michigan, NPL network in the UK, and the CYCLADES network in France. Although these networks all used standardized rules, or protocols, for creating data packets, each network had its own protocols, which made it difficult to connect them.

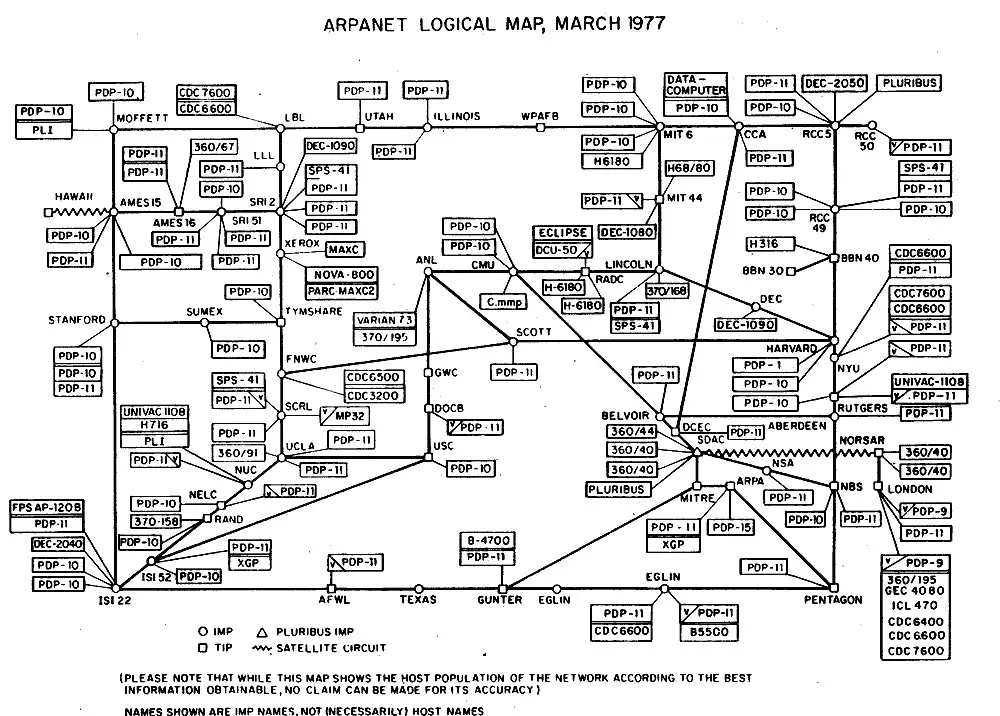

ARPANET Logical Map, March 1977. Image provided by DARPA.

Internet protocols

Back at ARPA, which was now called DARPA (the Defense Advanced Research Projects Agency), Bob Kahn, an engineer with the Information Processing Techniques Office, had been tasked with creating a satellite network to connect ARPANET to the British NPL network. In order to join these different networks, Kahn and fellow engineer Vint Cerf had to create protocols that both networks could use to communicate with each other.

The CYCLADES network in France was already experimenting with more flexible protocols. The computers that made up its network weren’t as powerful as those on ARPANET, so its protocols needed to be simple and efficient. CYCLADES was also created with connections to other networks in mind.

It achieved these goals by adopting the end-to-end principle, where the devices on either end of a connection assumed the responsibility for ensuring things like reliability and security. In contrast to ARPANET’s protocols, in which each computer had to perform tasks like error checking for every packet that it passed along, computers on the CYCLADES network would simply pass packets along toward their destination as quickly as possible. Only the sender and receiver would perform error checking and they only had to check with each other to confirm that the information had arrived correctly.
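The end-to-end idea can be sketched in a few lines of Python. This is a toy simulation under stated assumptions, not the CYCLADES or TCP/IP implementation: the `relay` function stands in for an intermediate computer that forwards data without checking it (and whose link occasionally corrupts a byte), while only the receiving endpoint verifies a checksum and the sender retransmits on failure. The error rate, hop count, and function names are all invented for the example.

```python
import random
import zlib

def relay(packet, rng, error_rate=0.3):
    """Intermediate node: forwards the packet without inspecting it.
    The simulated link sometimes flips one byte along the way."""
    data = bytearray(packet["payload"])
    if data and rng.random() < error_rate:
        data[rng.randrange(len(data))] ^= 0xFF
    return {"payload": bytes(data), "checksum": packet["checksum"]}

def send_end_to_end(payload, hops=3, seed=1, max_tries=50):
    """Sender retransmits until the receiver's own check passes."""
    rng = random.Random(seed)
    for attempt in range(1, max_tries + 1):
        packet = {"payload": payload, "checksum": zlib.crc32(payload)}
        for _ in range(hops):
            packet = relay(packet, rng)
        # Only the receiving endpoint verifies the data.
        if zlib.crc32(packet["payload"]) == packet["checksum"]:
            return packet["payload"], attempt
    raise RuntimeError("gave up after too many retransmissions")

received, attempts = send_end_to_end(b"hello from the other end")
print(received, "arrived intact after", attempts, "attempt(s)")
```

The relays stay simple and fast because they never check anything. All of the reliability logic lives in the sender and receiver, which is the trade-off the end-to-end principle makes.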

Kahn and Cerf would take a similar approach in designing their protocols, the Transmission Control Protocol (TCP) and the Internet Protocol (IP). TCP/IP is still at the heart of internet communications today, enshrining the end-to-end principle in the internet itself, which in turn is one of the major technical arguments for the principle of net neutrality.

Routers

Another development that helped facilitate the linking of computer networks was the creation of computers designed just to manage the flow of information between other computers on the network. ARPANET had grown steadily since its creation, but so far it had mostly grown one computer at a time, with the new machines spread out across the whole network.

Connecting another network to ARPANET meant adding dozens or hundreds of computers at once, with all that new data flowing through one point. To manage this flow of information, new computers were created whose only purpose was to serve as connection points. Once again following the end-to-end principle, these specialized computers wouldn’t retain information about the messages they handled; they would just try to get them to their destination as quickly and efficiently as possible.

Though often called gateways or black boxes, these computers would eventually come to be known as routers. Today, this technology not only manages traffic throughout the backbone of the internet, but it’s also the same technology that led to your home router, which manages the traffic between your home network and the rest of the internet.

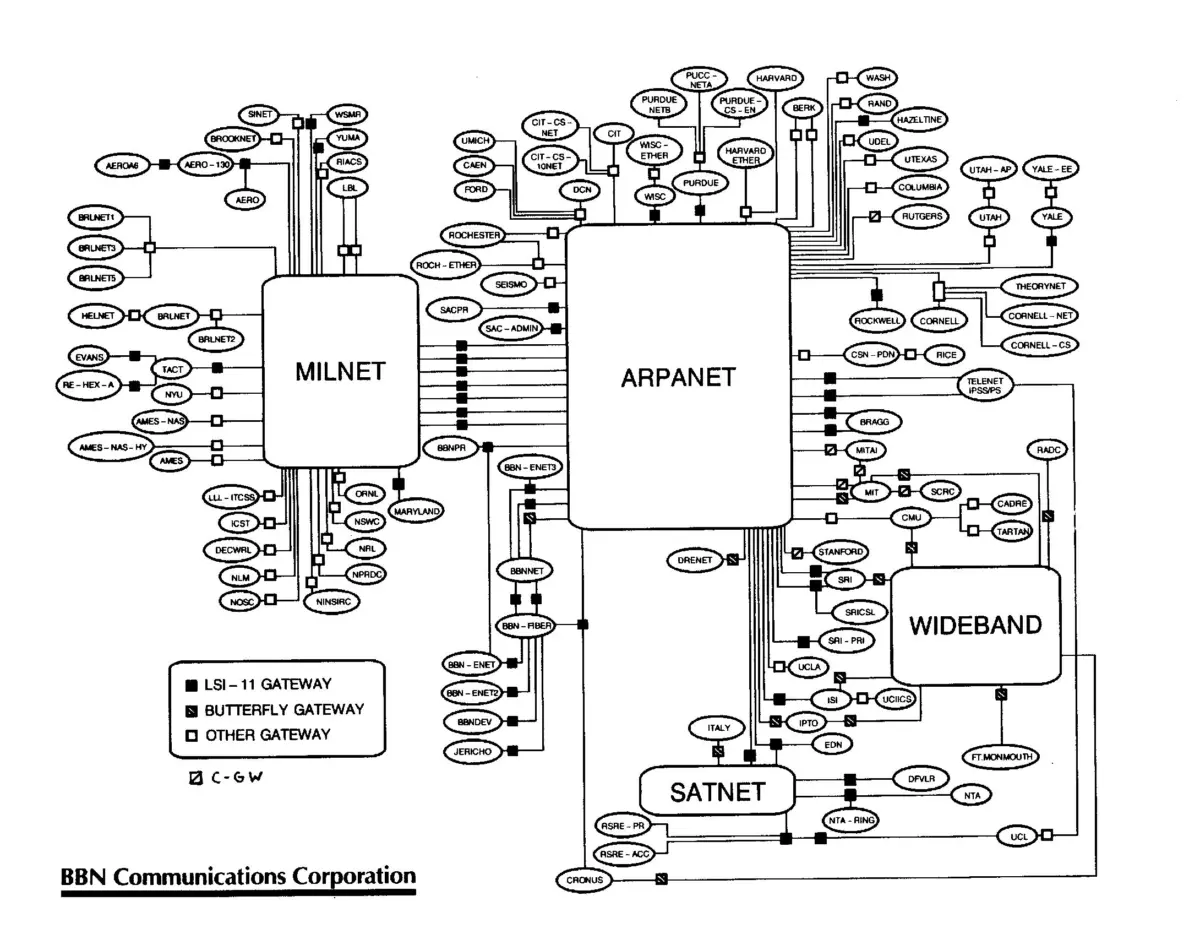

Map of the Internet, 1986. Image provided by Bob Hinden of Bolt Beranek and Newman.

A true internet

With new hardware and new protocols for communication, research networks across the world soon began to connect with one another, gradually adopting TCP/IP and other universal standards. As this internetworking became more and more important, users didn’t just talk about logging on to their own networks, but to a worldwide internet.

The World Wide Web

The internet continued to grow throughout the 1980s, though it was still primarily made up of government agencies, research organizations, and a few computing-related businesses. Its main purpose was research and education. Commercial activity was forbidden.

The rapid growth of the internet made finding information a lot more difficult. Initially, users simply knew every computer on the internet and they could print out a literal map of how they were connected. This quickly became impossible, so new ways of connecting and organizing information on the internet had to be created.

Interacting with computers and information

Throughout the 20th century, scholars had been theorizing ways to give people access to information more efficiently. Though many of the machines they envisioned would never be built, these ideas would go on to inspire actual computer technology.

In 1945, Vannevar Bush, who headed US research during World War II, wrote an essay in which he proposed a mechanical device that would organize documents with codes that the user could input to have the document displayed on a screen using microfilm. In the 1960s, Douglas Engelbart, an engineer at the Stanford Research Institute (one of the first four nodes on ARPANET), built upon Bush’s idea of codes and symbols as not just a way to organize information for the user, but to interconnect ideas within documents to allow for collaboration. Engelbart’s group, the Augmentation Research Center, would also invent the computer mouse as a way to improve the way people could interact with computers.

At the same time, sociologist Ted Nelson was also building off of Bush’s idea, designing hypothetical systems of connecting documents and images in a way that could only be done with computers. Since this interconnected material contained more meaning than could be easily represented by just printing it out on paper, he called it “hypertext.”

These concepts would lead to early markup languages and hypertext systems, which allowed for people to organize information on their own computers. They would, however, become much more important to the rapidly growing internet.

Hypertext and the Web

In the 1980s, Tim Berners-Lee, a physicist at the European Organization for Nuclear Research (CERN) in Switzerland, was experimenting with hypertext for organizing his own databases. He also thought that this same technology could be used to organize information on the internet.

Berners-Lee called his new information system the World Wide Web, which consisted of four main technologies:

- A way to locate information (URL)

- A way to transfer information (HTTP)

- A way to present information (HTML)

- A program that could run all this on a personal computer (web browser)

A uniform resource locator, or URL, is basically a web address, like “wikipedia.org” or “highspeedinternet.com,” but URLs aren’t just for websites. Every page of text and every image you find on the web has to have its own unique URL in order for your computer to find it.
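A full URL usually carries more than the site name. As a small illustration, Python's standard `urllib.parse` module can split one into its parts. The address below is a made-up example on the reserved `example.org` domain, not a real page.

```python
from urllib.parse import urlsplit

# Hypothetical address used only for illustration.
parts = urlsplit("https://www.example.org/history/arpanet.html?year=1969#nodes")

print(parts.scheme)    # https  (which protocol to use)
print(parts.hostname)  # www.example.org  (which computer to ask)
print(parts.path)      # /history/arpanet.html  (which document on that computer)
print(parts.query)     # year=1969  (extra parameters for the server)
print(parts.fragment)  # nodes  (a location inside the document)
```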

Hypertext Transfer Protocol, like other internet protocols, is a set of rules for how data is sent across the internet. It specifies the way that information is communicated, allowing users, for example, to click on links using their mouse and be directed to a new webpage.
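Under the hood, an HTTP exchange is just structured text. The sketch below builds a simple HTTP/1.1 request by hand and parses a canned response, without touching the network. The helper names `build_get_request` and `parse_response` are invented for this example, and a real client (such as a browser or Python's `http.client`) handles many more cases.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Compose the text a browser sends when you follow a link."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",
        "",  # a blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")

def parse_response(raw: bytes):
    """Split a response into status code, reason, headers, and body."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    _version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return int(code), reason, headers, body

request = build_get_request("www.example.org", "/history.html")

# A canned response standing in for what a server might send back.
sample = (b"HTTP/1.1 200 OK\r\n"
          b"Content-Type: text/html\r\n"
          b"Content-Length: 14\r\n\r\n"
          b"<h1>Hello</h1>")
code, reason, headers, body = parse_response(sample)
print(code, reason, headers["content-type"], body)
```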

Anyone who’s ever built (or tried to build) a webpage has some familiarity with HTML. Hypertext Markup Language allows you to take a bunch of information and mark individual parts to indicate that certain words are a heading, that other words are meant to be a paragraph, and others might be links to other pages.
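To show what that markup looks like to a program, here is a short sketch that uses Python's standard `html.parser` module to pull the heading and links out of a tiny page. The page content and the `OutlineParser` class are made up for the example.

```python
from html.parser import HTMLParser

# A tiny hand-written page: one heading, two paragraphs, one link.
PAGE = """
<h1>A History of the Internet</h1>
<p>ARPANET went online in 1969.</p>
<p>Read about <a href="/web.html">the Web</a> next.</p>
"""

class OutlineParser(HTMLParser):
    """Collects heading text and link targets while reading the markup."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self.links = []
        self._in_heading = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_heading = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_heading = False

    def handle_data(self, data):
        if self._in_heading:
            self.headings.append(data)

parser = OutlineParser()
parser.feed(PAGE)
print(parser.headings)  # ['A History of the Internet']
print(parser.links)     # ['/web.html']
```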

Berners-Lee also created the first web browser, simply called WorldWideWeb, but others would soon follow, such as Mosaic (whose lead developers went on to create Netscape Navigator) and Microsoft’s Internet Explorer.

The World Wide Web launched in December 1990 with CERN hosting the first website on the first web server. The World Wide Web made the internet much easier to use. You no longer had to be a scientist to understand how to get online. This was the point where the internet we know today began to take shape.

The Dot-Com Bubble

Now accessible to the general public, the internet exploded in the 1990s. Early online news sites like Bloomberg and Wired went online for the first time. Tech companies like Apple and IBM established their own websites. Megadeth became the first band to have its own webpage. And, of course, tons of individual people created their own sites that ranged from personal to very silly.

In 1992, Congress officially allowed government networks that had previously banned commercial activity to freely connect to commercial networks. Congress also passed the Telecommunications Act of 1996, which set up many of the laws that govern the internet today and shielded internet companies from liability for most of what their users post. The Digital Millennium Copyright Act of 1998 added protection from copyright claims in exchange for setting up takedown systems like copyright strikes. These new laws, along with a booming US economy, made way for a huge internet boom, as well as the seeds of a massive crash.

The internet gold rush

As many people in the US were discovering the internet for the first time, companies were racing to create an online presence and investors were pouring money into anything internet related. Since the URLs for commercial websites were supposed to end in “.com,” new online tech companies were often called “dot-com” companies.

During this period, stocks for tech companies were skyrocketing and everyone on Wall Street wanted to get a piece of it. Venture capitalists and investment bankers wanted to invest in anything with a website—even if it wasn’t clear how having a website would actually make money.

Dot-com companies, meanwhile, didn’t really need to have a plan to make money. Investors wanted growth, and growth was easy as long as you had investors giving you tons of money. New internet companies were spending money as fast as they could. Perhaps the most infamous was “Pets.com,” which was, at least in theory, just a site to buy pet supplies online, but received hundreds of millions of dollars in investments, which it spent, in part, on a massive advertising campaign, including a Super Bowl commercial.

The bubble bursts

While the stock market was going crazy for the idea of online stores, very few people actually shopped online in the 90s the way that they do today. As soon as investments slowed down, many of these companies were in trouble, because they were still years away from even breaking even.

One by one, dot-com companies started to go bankrupt. Pets.com went out of business less than a year after going public on the NASDAQ stock exchange. Many previously hyped internet brands disappeared or were absorbed into other companies. The dot-com bubble sent shockwaves across the internet and sent the US and many other countries into a recession.

The internet takes over

Although the dot-com bubble wiped out a lot of companies, others, like Amazon and Google, survived. Meanwhile, people in the US continued the adoption of home internet and internet providers continued expanding their networks. Slow dial-up services were soon made obsolete by DSL and cable internet connections.

Broadband becomes the norm

Faster internet connections opened up new possibilities for what people could do online. Netflix, which originally just mailed DVD rentals to subscribers, launched its streaming service in 2007. At the time, few people had enough internet speed to stream video online, but within a few years, streaming had completely eclipsed its DVD-by-mail service.

In 2005, YouTube was founded, allowing people to upload their own videos to the internet. YouTube was acquired by Google in 2006, which helped turn YouTube into one of the biggest sites on the internet.

An internet connection also became essential for day-to-day communication. Email had existed since the days of ARPANET but didn’t become widely adopted until web-based email services like Hotmail and Gmail came along. Instant messaging services had a similar burst of popularity in the 90s and early 2000s.

The internet also became more essential for education. While computers had been a part of classrooms since the days of Oregon Trail back in the 1980s, homework and research were moving from the school library to the internet. Wikipedia launched in 2001, becoming the starting point (and often the ending point) for every high school essay.

Online games also became more popular as internet access expanded. World of Warcraft launched in 2004, spawning a huge wave of massively multiplayer games. Tools like Java and Macromedia Flash also led to an explosion of browser-based games by independent developers, and later companies like PopCap and Zynga.

From brick and mortar to virtual shopping carts

Although online shopping wasn’t big enough in the 90s to justify Super Bowl commercials and balloons in the Macy’s Thanksgiving Day Parade, it continued to slowly gain popularity. Amazon, which had started as an online bookstore, expanded its catalog to become a massive online store for buying everything from household items to electronics to car parts. Sites like eBay and Etsy also took off in the 2000s, which made it possible for anyone to start selling products online.

This shift to online shopping had a serious impact on traditional brick and mortar stores, which is still continuing today. Most large retail companies like Walmart and Barnes & Noble created their own online stores, while many companies that couldn’t compete with the likes of Amazon simply went out of business.

In 2003, Apple launched the iTunes Store, which sold individual songs for 99 cents and was designed to work seamlessly with Apple’s iPod. The music industry had been relentlessly going after online music piracy since the rise of file sharing programs like Napster in the late 90s, but Apple’s entry into the realm of digital distribution upended the entire industry. Speaking in 2011, Miramax CEO Mike Lang called Apple “the strongest company in the music industry.”

By the mid-2000s, the internet had managed to touch almost every part of life for those living in the US. The internet was no longer a luxury; it was a necessity for participating in everyday life. There was now a deep digital divide, which meant that those without access to computers and the internet were at a severe disadvantage. At the same time, deregulation in the 80s and 90s had allowed tech companies and internet providers to grow into virtual monopolies, compounding these issues.

Web 2.0 and the Cloud

As the Web grew in social and economic importance, new technologies emerged to create more dynamic online experiences. Often labeled with catchy buzzwords like “Web 2.0,” these changes emphasized streamlining user experience and creating user-generated content.

While this shift was often promoted or romanticized as egalitarian, community driven, and empowering, it also eroded user privacy, blurred the lines between work and leisure, and created a whole lot of billionaires while the rest of the country went through an economic crisis.

A return to standards

As anyone who grew up on the 90s internet can tell you, it was kind of the Wild West. Tech CEOs were running rampant across the web just as much as unsupervised teenagers, and they created an even bigger mess. The scientists who built the internet did so by creating detailed protocols and standards that kept everything running smoothly. Once the internet was out of their hands, tech companies kind of did whatever they wanted.

Microsoft won the browser wars of the 90s by leveraging the monopoly of its Windows operating system. This got them sued for antitrust violations, but it also got Internet Explorer a 95% market share.

Speaking from experience, being a web developer in the 2000s could be miserable. And most of that misery came from Internet Explorer. Microsoft didn’t follow the established standards and protocols very strictly, often not supporting standard features or adding their own. Unless developers wanted their websites to display incorrectly for 95% of people, they had to program in workarounds to deal with the particular quirks of Internet Explorer.

One solution to this compatibility problem was to use proprietary plugins like the Macromedia (later Adobe) Flash Player, which then added even more company-specific elements to the Web. This meant that by the early 2000s, many websites wouldn’t load correctly if you were using the wrong browser or didn’t have the correct plugins installed.

To fix this problem, developers and computer scientists, along with organizations like Tim Berners-Lee’s World Wide Web Consortium (W3C), began pushing for web pages to be standards compliant, making use of technologies like JavaScript, PHP, and CSS. They also began working toward new standards like HTML5 that would be able to replace functionality that then required plugins like Flash.

Social networks and user participation

More dynamic web technologies made it possible for users to contribute more to the web without needing the same technical expertise as a web developer. Blogging software, for example, allowed users to create web pages by simply entering text in an online form, rather than creating an HTML document and uploading it to a server. The work of making the user’s plain text into a proper HTML page was all done behind the scenes with databases and PHP, so very little technical skill was required.
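The behind-the-scenes conversion is simple in principle. The sketch below uses Python rather than PHP and leaves out the database entirely, so treat it as an illustration of the idea rather than how any particular blogging platform worked. The `post_to_html` function is a made-up name; it escapes the user's text so that stray characters like `<` or `&` can't break the page, then wraps each paragraph in the appropriate tags.

```python
from html import escape

def post_to_html(title: str, body: str) -> str:
    """Turn a plain-text post into a small HTML fragment."""
    # Blank lines in the form's text box separate paragraphs.
    paragraphs = [p.strip() for p in body.split("\n\n") if p.strip()]
    parts = [f"<h1>{escape(title)}</h1>"]
    parts += [f"<p>{escape(p)}</p>" for p in paragraphs]
    return "\n".join(parts)

print(post_to_html("My <first> post", "Hello world.\n\nCats & dogs."))
# <h1>My &lt;first&gt; post</h1>
# <p>Hello world.</p>
# <p>Cats &amp; dogs.</p>
```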

Similarly, social networking sites allowed users to post small, but regular updates, while also allowing them to find and connect with friends. While this concept could be traced all the way back to Bulletin Board Systems and other services that predate the World Wide Web, the first site to gain widespread popularity was MySpace, launched in 2003, followed soon after by Facebook. Twitter, LinkedIn, Reddit, and many other such services also appeared in the 2000s.

Like the dot-com companies of the 90s, social networks’ rapid growth attracted a lot of attention from investors. Many of them, however, were able to become somewhat stable, if not actually profitable, by selling targeted advertisements. Since all the text, pictures, and information that users uploaded to these sites was stored on the social media site’s servers, these companies had access to a lot of very personal information. Advertisers were willing to pay a lot more to show their ads to very specific groups like “divorced goth baristas with a master’s degree” than to “people watching TV at 9pm.”

While many of these sites initially offered privacy controls, these tended to disappear as the value of all that personal data became apparent. Facebook became infamous for constantly changing its privacy policies to slowly remove users’ privacy controls. Sites also implemented more nefarious tactics like using HTTP cookies (originally designed to help users to stay logged into shopping sites while they moved between pages) to track people as they moved across other sites.
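The mechanics of cookie-based tracking are simple enough to sketch with Python's standard `http.cookies` module. Everything named here is hypothetical (the `tracker_id` cookie, the `visits` log, the example page names); the point is only that a server hands the browser an ID once, and any later request that carries the same ID back can be tied to the same person.

```python
from http.cookies import SimpleCookie

# A site (or an ad server embedded in it) hands the browser an ID.
jar = SimpleCookie()
jar["tracker_id"] = "abc123"      # hypothetical identifier
jar["tracker_id"]["path"] = "/"
set_cookie_header = jar.output(header="Set-Cookie:")
print(set_cookie_header)

visits = {}  # hypothetical server-side log: tracker ID -> pages seen

def record_visit(cookie_header: str, page: str) -> None:
    """Parse the Cookie header a browser sends back and log the page."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    if "tracker_id" in cookie:
        visits.setdefault(cookie["tracker_id"].value, []).append(page)

# The browser returns the same ID with every later request.
record_visit("tracker_id=abc123", "news-site.example/politics")
record_visit("tracker_id=abc123", "shop.example/cart")
print(visits)
```

When the same tracking server is embedded in many unrelated sites, this one log ends up describing a person's browsing across all of them.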

Despite these issues, social networking sites became some of the most popular sites on the internet, and the companies behind them grew into tech giants.

Smartphones

In 2007, Apple unveiled the iPhone, a sleek smartphone that used a touchscreen, rather than a keypad or a stylus. It combined the functionality of a cell phone with that of an iPod, a personal digital assistant (PDA), and a digital camera. It wasn’t the first smartphone; in fact, BlackBerry smartphones had become somewhat popular among business people and the chronically online. Nothing about the iPhone was particularly new or innovative and, as Microsoft CEO Steve Ballmer mockingly pointed out, at $500, it was incredibly expensive for a phone.

To Ballmer’s surprise, the iPhone was a huge success, and soon other phone manufacturers and tech companies, including Microsoft, were hurrying to catch up. In 2008, Google introduced the open-source Android operating system, which was soon used by a number of different phones. Google launched its own line of Nexus phones in 2010.

At the time of the iPhone’s launch, the 2G cellular technology used by phone carriers in the US wasn’t well equipped for internet use. 2G networks handled calls and text messages well, but their data connections were too slow for much of the web. Although 3G technology had been around for a few years, few people were buying 3G phones and phone carriers weren’t in a rush to upgrade their networks. Smartphones would change all that.

Apple’s second model, the iPhone 3G, was launched a year later in 2008 and came with (you’ll never guess) 3G technology. From that point on, internet access would be considered a basic feature for any smartphone and soon, many of us would be constantly online, regardless of where we were.

Another feature that came standard on the iPhone 3G was the new Apple App Store, which had officially launched the day before. Like songs in the iTunes Store, most apps cost 99 cents, and Apple fans who were used to filling their iPods one song at a time were now eager to fill up their expensive new phones with whatever was available.

At the time, I was mostly working in Flash development, but every programmer I knew was talking about the booming market for mobile apps and games. It was common to hear stories of developers releasing a simple app like a “tip calculator” (which would be an easy project for a high school programming class) and selling a million copies.

It’s hard to overstate the impact that the iPhone had on not just the mobile phone market, but on the videogame industry, user interface design, and the internet in general.

The Cloud

Just as posting on blogs or social networks was easier for the average person than setting up their own web server, businesses were equally keen to let someone else handle their data rather than building and maintaining their own data center.

In 2002, Amazon launched Amazon Web Services (AWS), which not only allowed companies to rent storage space for their data but also let them rent databases, send automatic email to customers, cache data in different locations to reduce latency, rent processor time to perform large computations, and much more.

This model, called cloud computing, was not only valuable to small businesses that couldn’t afford a data center, but also to large companies that wanted flexibility. If your app grew twice as fast as you had expected, you could simply pay Amazon to spin up a bunch of new servers almost instantly. If your business lost a bunch of traffic, you could tell Amazon you wanted to reduce your number of servers to minimize costs. For many companies, this flexibility was well worth the expense of paying Amazon to handle your data.

In addition to AWS, other cloud computing platforms like Microsoft Azure and Google Cloud were launched in the late 2000s as more and more businesses decided to move their data into “the Cloud.” While this made software sound like it existed in some ethereal place, it tended to obscure the fact that software actually existed in massive data centers that consumed huge amounts of energy.

The internet today

The internet continues to be central to modern life in the US, for better or for worse. Lockdowns during the COVID-19 pandemic made people even more reliant on their internet connections, while highlighting the need for more robust infrastructure. Although the federal government has made some important investments in improving internet access in rural areas, it also failed to fund the Affordable Connectivity Program (ACP), one of the most effective programs for helping people access the economic benefits of the internet.

The tech industry is still beloved by venture capitalists who continue to throw money into speculative bubbles like virtual reality and AI, neither of which has managed to deliver its promised return on investment so far. While this has been great for the hardware companies that manufacture their chips, it’s not clear if companies like Meta and OpenAI can continue to invest in these technologies until they finally start to break even. Wall Street seems to be getting a bit nervous.

While there are a lot of concerning trends, people are taking note and trying to do something about them. As federal support for low-income internet stumbled, places like New York have made progress in improving internet access on a more local level. Net neutrality protections, which were overturned in 2017, were reinstated in 2024.

The next few years could be pretty bumpy, depending on how things go, but it’s helpful to have a bit of perspective when reading the headlines. There are still a lot of reasons to be optimistic about the future of the internet, and it’s motivating to see how far we’ve come.

Author - Peter Christiansen

Peter Christiansen writes about telecom policy, communications infrastructure, satellite internet, and rural connectivity for HighSpeedInternet.com. Peter holds a PhD in communication from the University of Utah and has been working in tech for over 15 years as a computer programmer, game developer, filmmaker, and writer. His writing has been praised by outlets like Wired, Digital Humanities Now, and the New Statesman.

Editor - Jessica Brooksby

Jessica loves bringing her passion for the written word and her love of tech into one space at HighSpeedInternet.com. She works with the team’s writers to revise strong, user-focused content so every reader can find the tech that works for them. Jessica has a bachelor’s degree in English from Utah Valley University and seven years of creative and editorial experience. Outside of work, she spends her time gaming, reading, painting, and buying an excessive amount of Legend of Zelda merchandise.